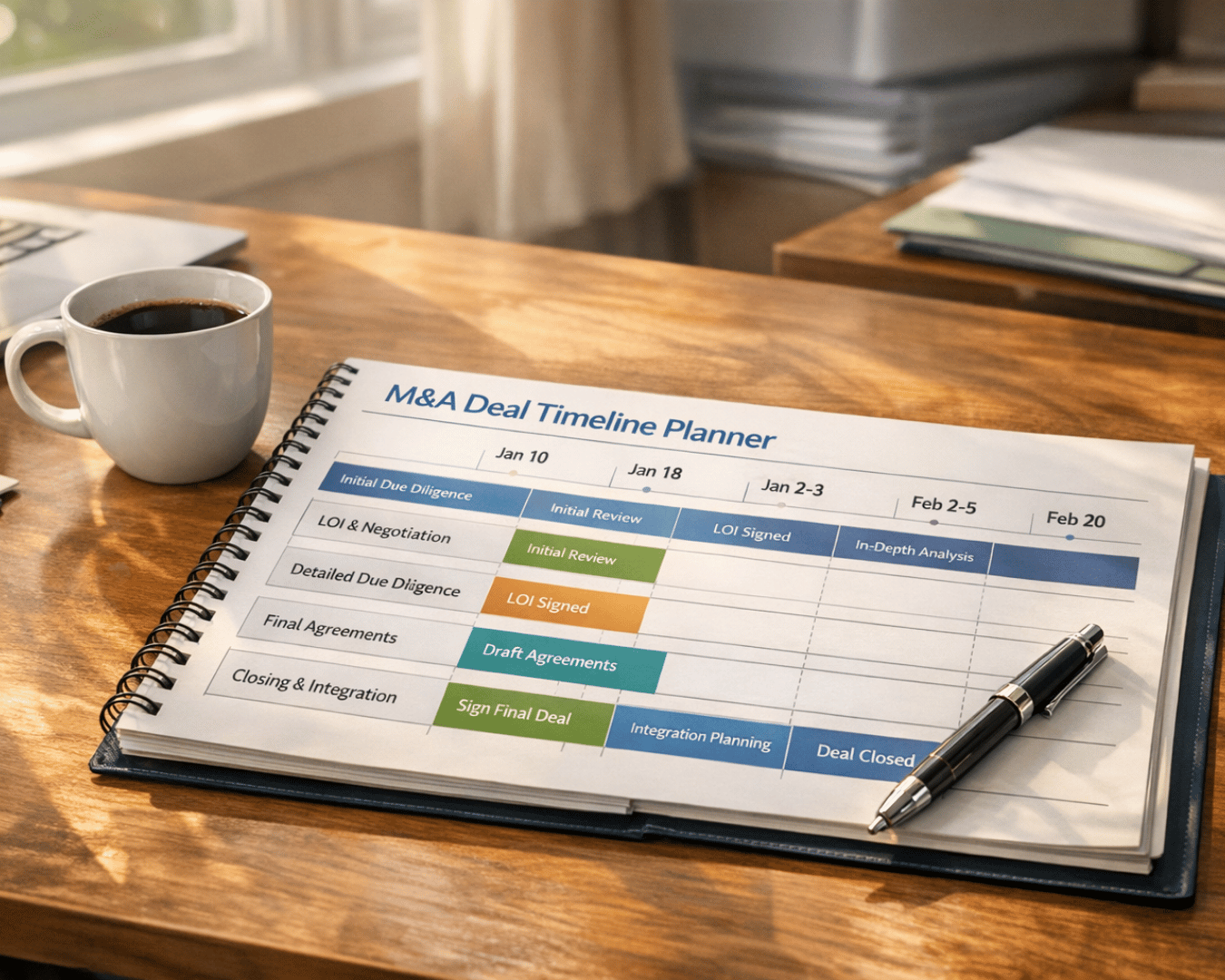

Want to make smarter M&A decisions? Start by looking beyond the financial statements. Throughput metrics - like cycle time, throughput rate, and work-in-process (WIP) levels - offer a clear view of how efficiently a company operates. While revenue growth might look great on paper, these metrics reveal whether operations are scalable or riddled with inefficiencies.

Here's what you need to know:

- Cycle Time: Measures how long it takes to complete a single step in a process.

- Throughput Rate: Tracks how many units are produced or tasks completed in a set time.

- WIP Levels: Indicates the amount of unfinished inventory or tasks, often signaling bottlenecks.
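To make the three definitions concrete, here is a minimal sketch that computes each one from step-level start/end records. The `StepEvent` schema, the step names, and the timestamps are hypothetical stand-ins for whatever an ERP or MES export actually provides.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class StepEvent:
    """One unit passing through one process step (hypothetical export schema)."""
    unit_id: str
    step: str
    start: datetime
    end: datetime


def avg_cycle_time_minutes(events, step):
    """Cycle time: mean minutes spent completing a single step."""
    durations = [(e.end - e.start).total_seconds() / 60 for e in events if e.step == step]
    return sum(durations) / len(durations)


def throughput_per_hour(events, final_step, window_start, window_end):
    """Throughput rate: units finishing the final step inside the window, per hour."""
    done = sum(1 for e in events
               if e.step == final_step and window_start <= e.end < window_end)
    return done / ((window_end - window_start).total_seconds() / 3600)


def wip_at(events, first_step, final_step, moment):
    """WIP: units that have started the first step but not yet finished the final one."""
    started = {e.unit_id for e in events if e.step == first_step and e.start <= moment}
    finished = {e.unit_id for e in events if e.step == final_step and e.end <= moment}
    return len(started - finished)


# Hypothetical two-step line (cut -> assemble) observed from 08:00
t0 = datetime(2024, 1, 8, 8, 0)


def at(minutes):
    return t0 + timedelta(minutes=minutes)


events = [
    StepEvent("A", "cut", at(0), at(10)),
    StepEvent("A", "assemble", at(15), at(33)),
    StepEvent("B", "cut", at(10), at(22)),
    StepEvent("B", "assemble", at(40), at(58)),
    StepEvent("C", "cut", at(22), at(30)),
]

print(avg_cycle_time_minutes(events, "cut"))                   # 10.0
print(throughput_per_hour(events, "assemble", at(0), at(60)))  # 2.0
print(wip_at(events, "cut", "assemble", at(35)))               # 2
```

Real exports are rarely this clean, so expect to spend time mapping fields and handling missing end times before any of these numbers are trustworthy.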

These metrics help identify hidden risks, such as overworked systems, quality issues, or unbalanced workflows. By analyzing IoT data, ERP exports, and time-and-motion studies, often shared through secure deal rooms, you can uncover operational realities that affect valuation and integration plans.

Key takeaway: Throughput metrics aren’t just technical details - they’re the foundation for making informed M&A decisions, ensuring you don’t overpay or miss critical risks.



Core Throughput Metrics for Due Diligence

When assessing a target company's operations, there are three key metrics that provide a clearer picture of how efficiently work flows through the business. These metrics go beyond financial statements, offering insights into the operational health of the organization.

Cycle Time

Cycle time measures how long it takes to complete each step of a process, starting from when work begins on a task to when that step is finished.

Analyzing cycle time during due diligence can help pinpoint inefficiencies that slow down operations. Tools like time and motion studies or value stream mapping can separate activities that add value from those that don’t. Often, real-world performance strays far from what the Standard Operating Procedures (SOPs) dictate. For example, an assembly step listed as requiring 12 minutes in the manual might actually take 18 minutes due to equipment breakdowns, insufficient training, or improvised employee workarounds.
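A simple way to quantify the SOP-versus-reality gap is to compare the mean of observed time-and-motion samples with the documented standard. The sketch below reuses the 12-minute assembly step from the example; the five sample timings are hypothetical.

```python
from statistics import mean


def sop_gap(observed_minutes, sop_minutes):
    """Compare observed cycle times for one step with the SOP standard.

    Returns (observed mean, gap in minutes, gap as % of the standard).
    """
    actual = mean(observed_minutes)
    gap = actual - sop_minutes
    return actual, gap, 100 * gap / sop_minutes


# Hypothetical time-and-motion samples for the 12-minute assembly step
samples = [17.5, 18.0, 19.0, 17.0, 18.5]
actual, gap, pct = sop_gap(samples, sop_minutes=12)
print(f"{actual:.1f} min observed, {gap:+.1f} min vs SOP ({pct:.0f}% over)")
# 18.0 min observed, +6.0 min vs SOP (50% over)
```

A handful of samples per station is only a starting point; the causes the text lists (breakdowns, training gaps, workarounds) still need to be identified on the floor.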

"Efficiency in manufacturing and service operations largely depends on how well processes are designed, sequenced, and managed to achieve optimal throughput." - Umbrex

You might also find that a product spends most of its total lead time - in some cases up to 80% - sitting idle between stations rather than being actively worked on. This is a red flag for poor process design, which inflates costs and delays deliveries.
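One common way to put a number on that idle share is process cycle efficiency: value-added time divided by total lead time. The minutes below are hypothetical and chosen to mirror the 80% idle case.

```python
def process_cycle_efficiency(value_added_minutes, lead_time_minutes):
    """Share of total lead time spent on value-adding work (0 to 1)."""
    if lead_time_minutes <= 0:
        raise ValueError("lead time must be positive")
    return value_added_minutes / lead_time_minutes


# Hypothetical unit: 48 min of hands-on work, 240 min from release to finished goods
pce = process_cycle_efficiency(48, 240)
print(f"value-added {pce:.0%}, waiting {1 - pce:.0%}")  # value-added 20%, waiting 80%
```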

After understanding cycle time, the next step is to evaluate throughput rate, which measures the overall output of the operation.

Throughput Rate

Throughput rate quantifies how many units a company produces or tasks it completes within a specific time frame, such as per hour, per shift, or per day. This metric highlights the company’s operational capacity and its ability to meet demand without compromising quality.

For instance, a distribution center might handle 5,000 orders daily under normal conditions. But what happens during a seasonal rush? If meeting peak demand requires excessive overtime and results in quality issues, it signals scalability challenges.

Using queueing theory and simulation models, you can pinpoint bottlenecks in the process. Bain & Company documented a case in September 2024 where a global manufacturer used industry benchmarks and external diligence to uncover cost synergies that were double their initial estimate. This deeper operational analysis allowed the company to make a competitive acquisition offer and exceed their synergy targets post-acquisition.
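As a small illustration of the queueing-theory side, the textbook M/M/1 model shows why a station running close to full utilization generates long waits and growing WIP. The model assumes Poisson arrivals, exponential service times, and a single server, which is a simplification of most real lines. The 100-units-per-hour service rate is hypothetical.

```python
def mm1_metrics(arrival_rate, service_rate):
    """Steady-state M/M/1 queue metrics, with rates in units per hour.

    Assumes Poisson arrivals and exponential service times at one server.
    """
    if arrival_rate >= service_rate:
        raise ValueError("utilization >= 1: the queue grows without bound")
    rho = arrival_rate / service_rate               # utilization
    wip = rho / (1 - rho)                           # average units in system
    w_system = 1 / (service_rate - arrival_rate)    # average hours in system
    w_queue = rho / (service_rate - arrival_rate)   # average hours waiting before service
    return rho, wip, w_system, w_queue


for arrivals in (50, 80, 95):
    rho, wip, w, wq = mm1_metrics(arrivals, service_rate=100)
    print(f"utilization {rho:.0%}: avg WIP {wip:.1f} units, avg wait {wq * 60:.1f} min")
```

Under these assumptions, average waiting time rises from under a minute at 50% utilization to more than 11 minutes at 95%. That nonlinearity is why simulation of the actual routing is usually the next step.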

Granular data sources such as IoT device logs and real-time event streams offer a finer-grained view of throughput. They can uncover trends that monthly reports miss, such as mid-shift productivity dips or underperforming product lines.

In addition to throughput rates, monitoring work-in-process levels is critical for identifying bottlenecks and understanding flow constraints.

Work-in-Process (WIP) Levels

WIP levels track the amount of materials or tasks currently in progress but not yet completed. High WIP levels often signal operational inefficiencies and bottlenecks.

For example, excessive WIP can occur when one station processes 100 units per hour, but the next step can only handle 70 units. This mismatch creates a bottleneck, causing work to pile up. In some cases, management may overlook long-standing constraints, leading to inflated lead times and operational delays.
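The 100- versus 70-units-per-hour mismatch can be sketched as a deterministic hour-by-hour simulation. This is a simplified fluid approximation that ignores breakdowns and variability.

```python
def wip_buildup(upstream_rate, downstream_rate, hours, start_wip=0):
    """Queue in front of the downstream station at the end of each hour.

    Each hour the upstream station adds `upstream_rate` units and the
    downstream station removes up to `downstream_rate` units.
    """
    wip = start_wip
    history = []
    for _ in range(hours):
        wip += upstream_rate
        wip -= min(wip, downstream_rate)
        history.append(wip)
    return history


print(wip_buildup(100, 70, hours=8))  # [30, 60, 90, 120, 150, 180, 210, 240]
```

The queue grows by 30 units every hour, so a single 8-hour shift leaves 240 units waiting in front of the slower station.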

The Theory of Constraints provides a framework for identifying the "drum" process - the step that determines the pace of the entire operation. If other processes aren’t aligned with this constraint, WIP will inevitably build up. Modern digital dashboards allow for real-time WIP tracking, offering a significant advantage over outdated manual methods.
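For a simple serial line, finding the drum comes down to locating the station with the lowest capacity, because every unit must pass through it. The station names and capacities below are hypothetical.

```python
def find_drum(station_capacity):
    """Return the constraint station and the line's maximum throughput.

    In a serial line every unit passes every station, so the slowest
    station caps output for the whole line.
    """
    drum = min(station_capacity, key=station_capacity.get)
    return drum, station_capacity[drum]


# Hypothetical capacities in units per hour, listed in process order
capacities = {"cutting": 100, "welding": 70, "painting": 90, "packing": 120}
drum, line_rate = find_drum(capacities)
spare = {s: c - line_rate for s, c in capacities.items() if s != drum}
print(drum, line_rate, spare)
```

Stations upstream of the drum with spare capacity will pile WIP in front of it if they run flat out. Downstream stations simply wait. In the Theory of Constraints, releasing work at the drum's pace ("drum-buffer-rope") is the standard response.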

High WIP levels aren’t just operational headaches - they directly affect financial health. Materials stuck in production represent tied-up cash that could otherwise generate returns. Because WIP feeds directly into cash flow projections and working capital adjustments, it has a measurable effect on valuation.
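Little's Law (average WIP = throughput x average lead time) links lead time to the cash tied up in production. The sketch below uses hypothetical volumes, lead times, and a flat average cost per WIP unit. Real WIP carries only partially accumulated cost, so treat the dollar figure as an estimate.

```python
def wip_from_littles_law(throughput_per_day, lead_time_days):
    """Little's Law: average WIP = throughput x average lead time."""
    return throughput_per_day * lead_time_days


def wip_cash(units, avg_cost_per_unit):
    """Cash tied up in WIP, assuming a flat average accumulated cost per unit."""
    return units * avg_cost_per_unit


# Hypothetical target: 200 units/day, 12-day lead time, $450 average cost per WIP unit
current_units = wip_from_littles_law(200, 12)  # 2,400 units in process
target_units = wip_from_littles_law(200, 7)    # 1,400 units if lead time falls to 7 days
released = wip_cash(current_units - target_units, 450)
print(f"working capital released: ${released:,}")  # working capital released: $450,000
```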

How to Collect and Analyze Throughput Data

Methods for Gathering Data

Start by requesting key documents such as process diagrams, standard operating procedures (SOPs), cycle and lead time data, quality records, and shift schedules. These materials provide a foundational understanding of how operations are intended to function based on the company’s own standards.

Leverage digital tools to extract real-time data from operational systems. Exports from ERP platforms, IoT device telemetry, and transactional event streams can uncover details often missed in monthly reports. For instance, IoT data might reveal mid-shift productivity drops or equipment downtime patterns that aggregated reports fail to highlight. Use advanced tools like Apache Spark to sift through unstructured historical data and uncover untapped operational insights.
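Spotting a mid-shift dip does not require heavy infrastructure for a single site. A minimal sketch, assuming the export is a list of ISO-8601 completion timestamps, buckets completions by hour and flags hours well below the shift median. The 75% threshold and the sample data are illustrative assumptions.

```python
from collections import Counter
from datetime import datetime
from statistics import median


def hourly_counts(completion_timestamps):
    """Count unit completions per clock hour from ISO-8601 timestamp strings.

    Hours with no completions do not appear in the result.
    """
    counts = Counter()
    for ts in completion_timestamps:
        hour = datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)
        counts[hour] += 1
    return dict(sorted(counts.items()))


def flag_dips(counts, threshold=0.75):
    """Hours whose output falls below `threshold` x the median hourly output."""
    typical = median(counts.values())
    return [hour for hour, n in counts.items() if n < threshold * typical]


# Hypothetical export: completions per hour for a 06:00-14:00 shift
per_hour = [40, 42, 41, 25, 39, 40, 43, 41]
stamps = [f"2024-03-04T{6 + h:02d}:{i:02d}:00"
          for h, n in enumerate(per_hour) for i in range(n)]

counts = hourly_counts(stamps)
print(flag_dips(counts))  # [datetime.datetime(2024, 3, 4, 9, 0)]
```

At multi-site, multi-year scale the same aggregation would move to a distributed engine such as Spark, but the logic stays the same.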

On the ground, apply techniques like value stream mapping and time-and-motion studies at critical stations. These methods visually pinpoint where time is spent and where value is actually added, helping prioritize areas for improvement. Additionally, gather at least three years of financial and operational reports, including monthly management summaries, to identify trends that annual statements might obscure.

| Data Collection Method | Tools | Key Insight Provided |
|---|---|---|
| Historical Review | ERP exports, production logs, SOPs | Baseline cycle times and lead times |
| On-Site Observation | Time-and-motion studies, value stream mapping (VSM) | Verification of reported data against real-world operations |
| Advanced Analytics | AI, IoT telemetry, Spark/Hadoop | Real-time signals and event-driven forecasting |
| Primary Research | Employee interviews, observations | Identification of operational bottlenecks |

Comparing Metrics to Industry Standards

Relying solely on internal data can paint an incomplete picture. To understand a company's competitive standing, incorporate external benchmarks, primary research, and data scraping. In the September 2024 Bain & Company case mentioned earlier, the global manufacturer combined external data scraping with primary research during due diligence. This approach uncovered cost synergies more than double the initial estimate, and the company went on to complete the acquisition and realize those synergies.

For roll-up strategies, compare the target’s efficiency - such as production costs and lead times - against the best practices within your existing portfolio. Internal benchmarking can quickly highlight synergy opportunities. Use the Theory of Constraints to identify the "drum" process that dictates the operation’s pace, and evaluate whether supporting processes align with this constraint’s throughput. Station cycle times that sit close to takt time (available production time divided by customer demand) signal a balanced line, while stations running well above or below takt highlight areas needing improvement.
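A quick line-balance check compares each station's cycle time with takt time. The shift length, demand, station times, and the 0.8 underload cutoff below are hypothetical and illustrative, not industry standards.

```python
def takt_time(available_minutes, demand_units):
    """Takt time: available production time divided by customer demand."""
    return available_minutes / demand_units


def balance_report(station_cycle_minutes, takt, underload=0.8):
    """Compare each station's cycle time with takt time.

    `underload` is an illustrative cutoff, not an industry standard.
    """
    report = {}
    for station, cycle in station_cycle_minutes.items():
        load = cycle / takt
        if load > 1:
            status = "over takt"
        elif load < underload:
            status = "underloaded"
        else:
            status = "balanced"
        report[station] = (round(load, 2), status)
    return report


# Hypothetical line: 450 available minutes per shift, demand of 90 units per shift
takt = takt_time(450, 90)  # 5.0 minutes per unit
stations = {"kitting": 3.2, "assembly": 5.6, "test": 4.5, "pack": 4.1}
for name, (load, status) in balance_report(stations, takt).items():
    print(f"{name:<9} {load:.2f} x takt  {status}")
```

Here assembly runs above takt, so it cannot keep pace with demand without overtime or rebalancing, while kitting has slack that might absorb some of that work.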

"The best way to succeed is to come armed with proprietary insights from diligence that are faster, deeper, and more focused than your competitors." - Bain & Company

Once insights are gathered, validate them through direct observation to ensure they align with operational realities.

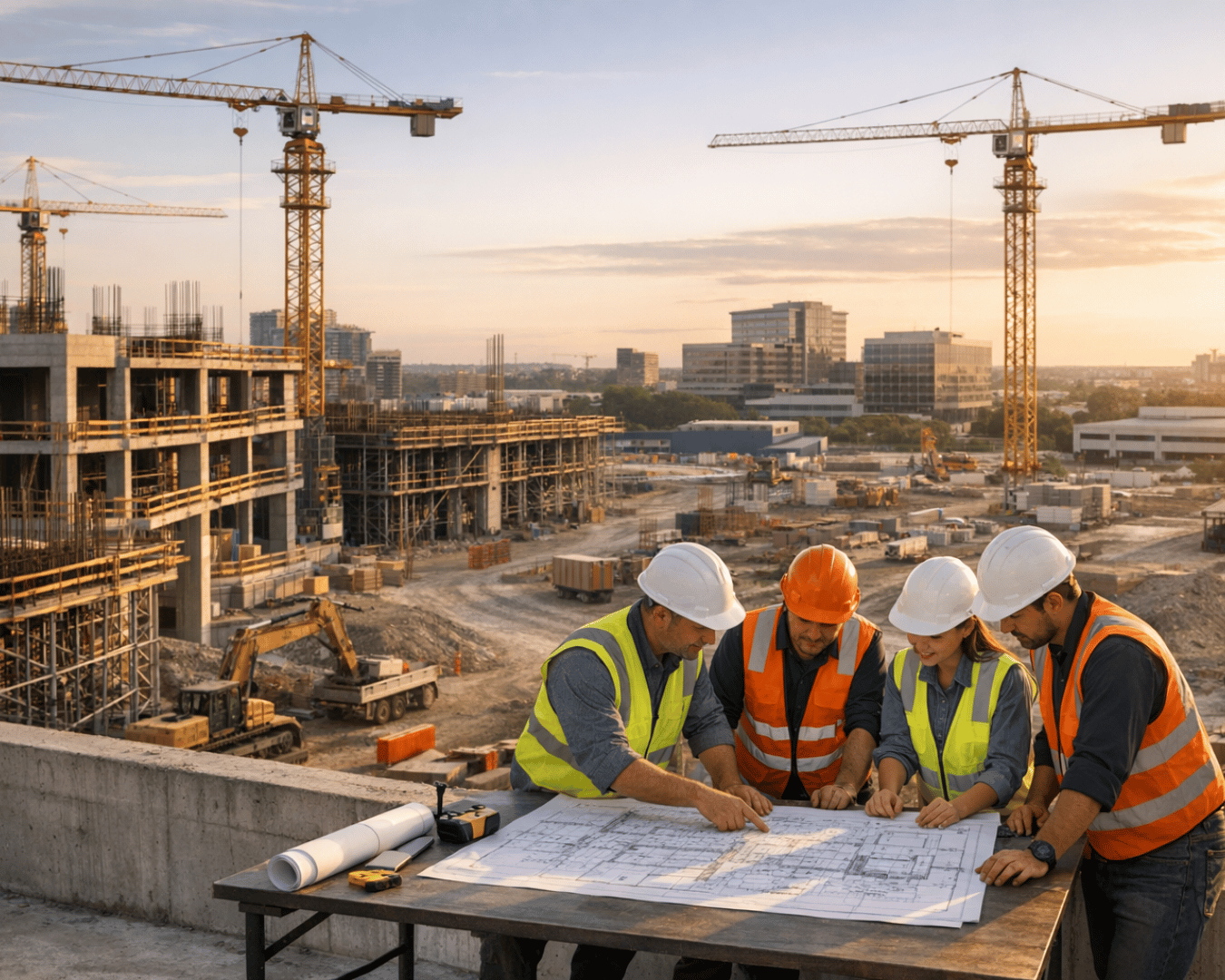

Verifying Data Through Site Visits

External benchmarks provide a performance baseline, but on-site visits are crucial for verifying whether the target's reported data reflects reality. Never rely solely on reports: site visits reveal operational truths that documentation often misses. During these visits, conduct time-and-motion studies at key stations to measure actual cycle times. Compare these findings with documented SOPs to uncover discrepancies, hidden rework loops, or manual workarounds that drive up costs.

Speak with frontline operators to identify labor dependencies, capacity constraints, and manual processes that digital logs might overlook. Look out for manual or paper-based tracking systems - these often signal inefficiencies and incomplete data. Cross-check physical production boards or digital dashboards on-site with historical records to confirm the accuracy of real-time reporting. Assess the facility layout as well; U-shaped or linear assembly cells are generally more efficient for material flow. If using AI or machine-learning insights, validate them with human-led audits and expert judgment to ensure the findings are defensible.

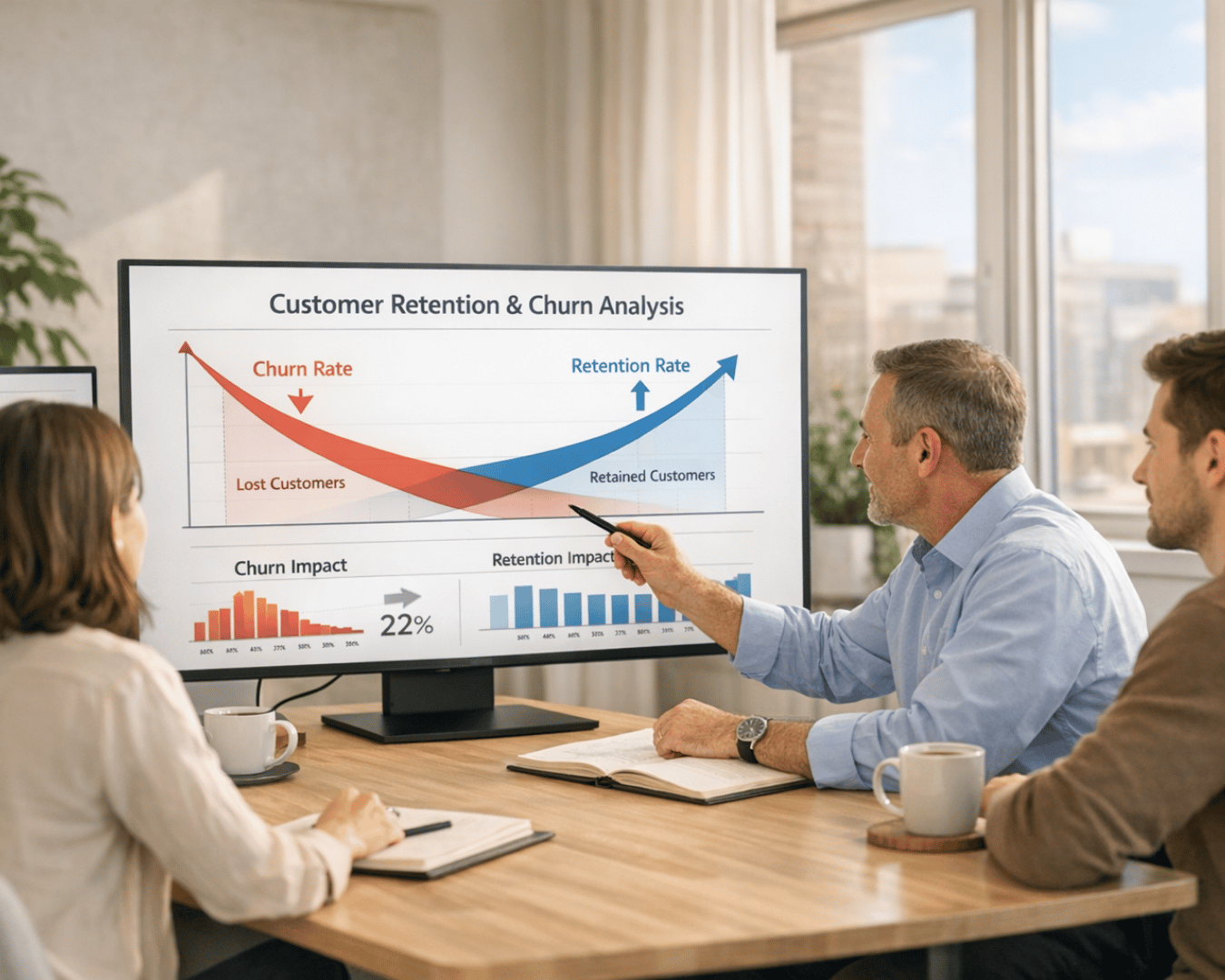

Warning Signs in Throughput Metrics

Declining Throughput Over Time

A steady drop in throughput over multiple quarters is a clear warning sign. It often points to deeper issues, such as quality problems that result in high rates of scrap and rework. Don’t just focus on the headline numbers - dig deeper to see if revenue growth is being driven by short-term fixes or unsustainable practices.
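To separate a genuine downward trend from quarter-to-quarter noise, fit an ordinary least-squares slope to quarterly throughput. The eight quarters of average daily output below are hypothetical.

```python
def trend_per_quarter(values):
    """Ordinary least-squares slope of values against quarter index 0, 1, 2, ..."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


# Hypothetical average daily throughput over eight quarters
quarterly = [5200, 5150, 5080, 5010, 4950, 4870, 4800, 4730]
slope = trend_per_quarter(quarterly)
print(f"{slope:.1f} units/day per quarter")  # -68.2 units/day per quarter
```

A consistently negative slope is the starting point for the questions that matter: is scrap rising, is equipment aging, or is the mix shifting toward harder products?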

"The sale pitch will tell you that the company is growing at 10% annually, while due diligence will tell you that this growth has been achieved through overtrading." - DealRoom

Quality problems create rework loops that inflate costs and extend cycle times. Similarly, if the company shows no evidence of continuous improvement programs like Lean or Six Sigma, it may have a culture that prioritizes quick fixes over long-term solutions.

These patterns often lead to other operational problems, such as inconsistent performance and excessive work-in-process levels.

High Variability in Performance

When throughput fluctuates widely, it’s a sign of unreliable operations. This kind of variability makes it hard to project cash flows accurately, increasing the risk of overpaying for an acquisition. Up to 25% of M&A deals are abandoned during the pre-completion phase, and unexpected performance swings uncovered in diligence are one reason deals fall apart. Such instability not only complicates valuation but also hints at deeper operational challenges.
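The coefficient of variation (standard deviation divided by the mean) is a simple, unitless way to compare throughput stability across sites or periods. Both daily series below are hypothetical and have similar averages.

```python
from statistics import mean, stdev


def coefficient_of_variation(samples):
    """Sample standard deviation divided by the mean (unitless)."""
    return stdev(samples) / mean(samples)


# Hypothetical daily output for two plants with similar averages
stable = [980, 1010, 995, 1005, 990, 1020]
erratic = [620, 1350, 900, 1480, 700, 1200]
print(f"stable CV {coefficient_of_variation(stable):.1%}")
print(f"erratic CV {coefficient_of_variation(erratic):.1%}")
```

The averages look alike on a monthly report, but the second plant's spread is more than twenty times wider, and that spread is what makes its cash flows hard to forecast.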

Variability also creates headaches when it comes to integration. Without standard operating procedures (SOPs), merging inconsistent workflows into a unified system becomes a tall order. The lack of tools like production boards or digital dashboards - essential for tracking real-time throughput - suggests that the company may not be monitoring its performance closely enough to address issues early.

Elevated Work-in-Process Levels

High levels of work-in-process (WIP) inventory are another red flag. They often point to unbalanced workflows and bottlenecks. This buildup can stretch lead times, delay customer orders, and tie up working capital that could be better used elsewhere.

Excessive WIP can mask deeper problems, such as rework, scrap, or idle time, all of which increase costs without adding value. Inflexible production lines - designed for a narrow product range - can also lead to WIP accumulation when demand shifts, especially if lengthy changeovers or retooling are required. During site visits, inefficient layouts that cause excessive material movement can make these issues worse. Additionally, reliance on highly specialized labor can create capacity constraints and amplify throughput variability.

Using Throughput Metrics for Valuation and Integration

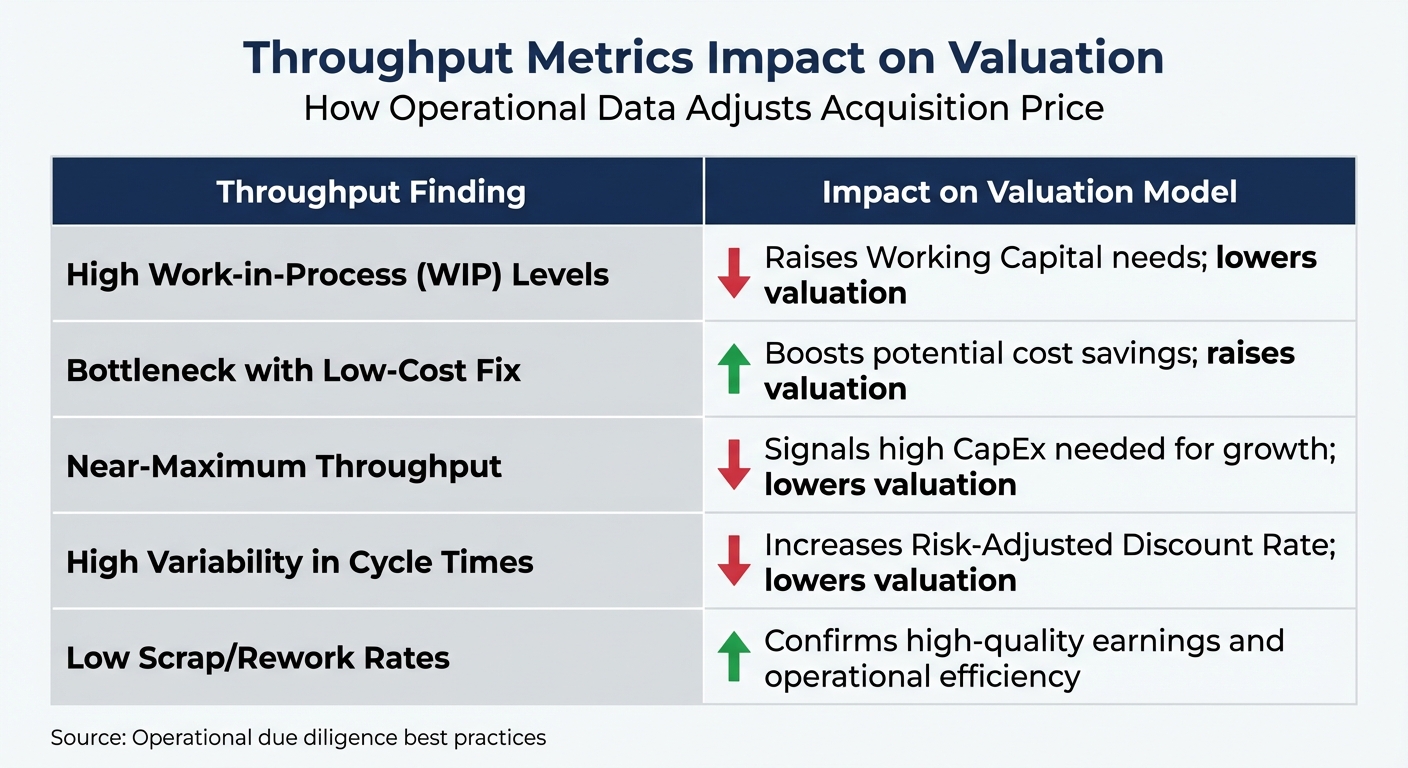

How Throughput Metrics Impact M&A Valuation: Key Findings and Adjustments

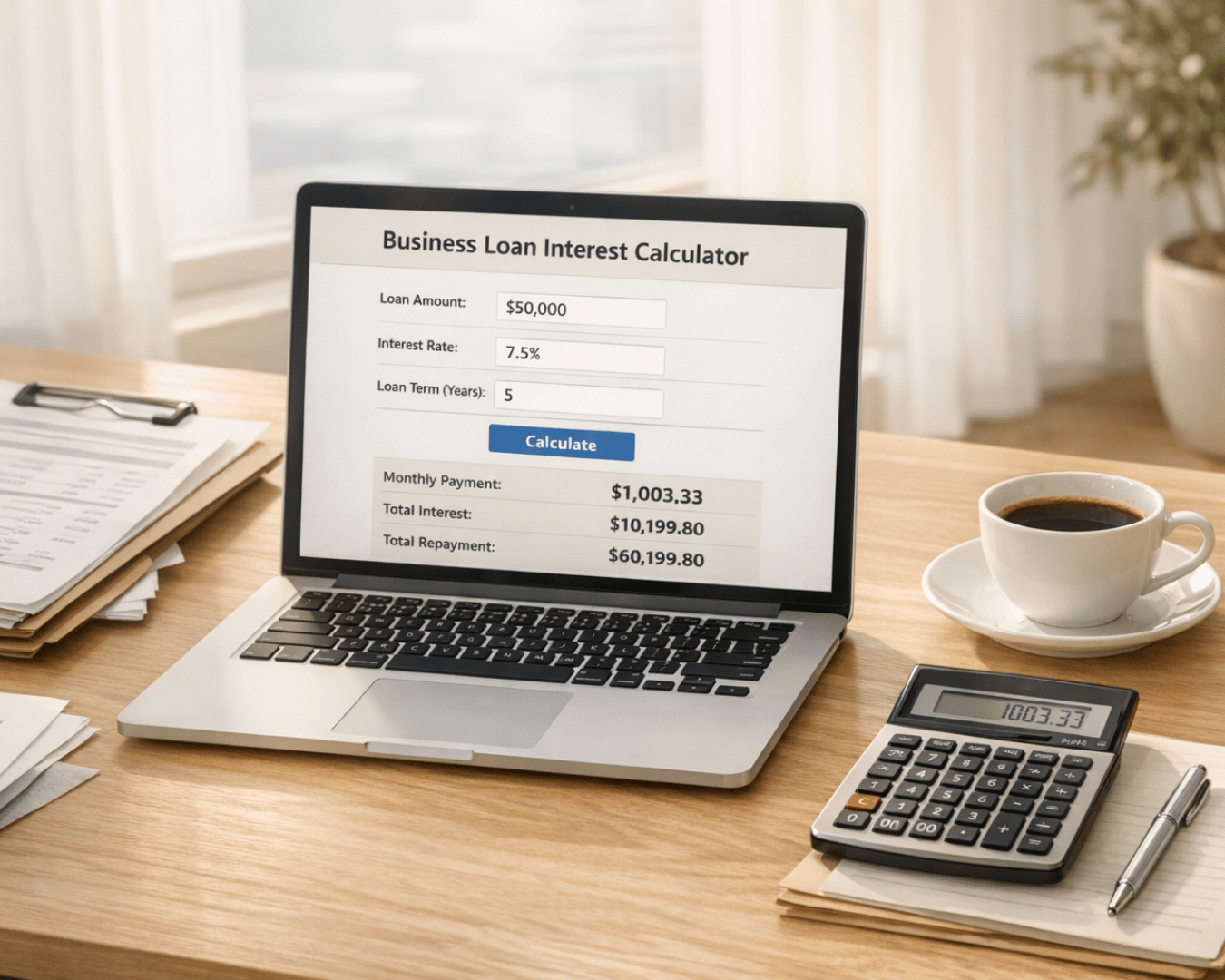

Adjusting Valuation Based on Throughput Data

Throughput metrics play a key role in determining the right acquisition price. During due diligence, identifying bottlenecks, rework loops, or idle time helps quantify potential cost savings - your cost synergy estimate, or the money you expect to save once the deal closes. In the Bain & Company case described above, a thorough operational analysis roughly doubled the estimated synergies.

The same principles apply to revenue projections. For instance, if the seller claims 15% annual growth but throughput data reveals production is already operating at 95% capacity, those growth predictions are unrealistic without significant capital investment. In such cases, you may need to reduce the offer price or account for additional capital expenses. Additionally, high variability in cycle times indicates operational instability, which should increase the discount rate in your financial model, ultimately lowering the company's present value.
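The 95%-utilization example can be checked with simple compounding arithmetic. This sketch assumes demand grows at a constant annual rate and capacity stays fixed. It shows how little runway the current footprint offers and how much extra capacity the seller's growth plan implies.

```python
import math


def years_until_capacity(utilization, annual_growth):
    """Years of growth current capacity can absorb before demand exceeds it.

    Assumes demand compounds at `annual_growth` and capacity stays fixed.
    """
    if utilization >= 1:
        return 0.0
    return math.log(1 / utilization) / math.log(1 + annual_growth)


def required_capacity_multiple(utilization, annual_growth, years):
    """Capacity needed after `years`, as a multiple of today's capacity."""
    return utilization * (1 + annual_growth) ** years


runway = years_until_capacity(0.95, 0.15)
needed = required_capacity_multiple(0.95, 0.15, years=3)
print(f"runway: {runway * 12:.1f} months; capacity needed in 3 years: {needed:.2f}x today")
```

Under these assumptions, the plant runs out of headroom in under five months, and three years of 15% growth would require roughly 44% more capacity than exists today. That CapEx belongs in the model.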

Here’s a quick breakdown of how specific throughput findings can impact valuation:

| Throughput Finding | Impact on Valuation Model |
|---|---|
| High work-in-process (WIP) levels | Raises working capital needs; lowers valuation |
| Bottleneck with a low-cost fix | Increases potential cost savings; raises valuation |
| Near-maximum throughput | Signals high CapEx needed for growth; lowers valuation |
| High variability in cycle times | Increases the risk-adjusted discount rate; lowers valuation |
| Low scrap/rework rates | Confirms high-quality earnings and operational efficiency |

Modern valuation frameworks often incorporate real-time IoT data to create probabilistic models rather than relying on single-point estimates. These models provide a range of valuations based on various performance scenarios, offering a clearer understanding of both risks and potential upsides.
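A toy Monte Carlo illustrates the idea of a valuation range instead of a point estimate. It assumes annual throughput is normally distributed and EBITDA is a fixed margin per unit minus fixed costs. Every figure is hypothetical, and a real model would draw the throughput distribution from the target's own data.

```python
import random
from statistics import quantiles


def simulate_ebitda(n_trials, mean_units, sd_units, margin_per_unit, fixed_costs, seed=7):
    """Toy Monte Carlo: annual EBITDA driven by uncertain throughput.

    Assumes normally distributed annual units (floored at zero), a constant
    contribution margin per unit, and fixed overhead.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        units = max(0.0, rng.gauss(mean_units, sd_units))
        results.append(units * margin_per_unit - fixed_costs)
    return results


outcomes = simulate_ebitda(10_000, mean_units=1_200_000, sd_units=150_000,
                           margin_per_unit=8.0, fixed_costs=6_000_000)
deciles = quantiles(outcomes, n=10)  # nine cut points: 10th ... 90th percentile
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"EBITDA P10 ${p10:,.0f}  P50 ${p50:,.0f}  P90 ${p90:,.0f}")
```

Applying a valuation multiple to the P10-P90 band turns it into a price range, which makes the downside from throughput risk explicit in negotiations.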

Tracking Throughput Metrics After Acquisition

Once the deal is done, the focus shifts from valuation adjustments to monitoring operational performance. Throughput metrics become your go-to tool for ensuring those projected synergies turn into reality. Start by establishing baseline metrics within the first 30 days, such as cycle time for each process step, average and peak throughput rates, WIP levels, and defect or rework rates. These initial measurements will help you determine whether operations are stabilizing or struggling under new ownership.

Top-performing acquirers aim to capture about 30% of synergies within the first 90 days of integration. To meet this goal, consider implementing digital dashboards or production boards that display real-time throughput data. These tools allow operators and managers to immediately spot and address slowdowns. For example, Nokia successfully raised its cost-saving target from €900 million in 2016 to €1.2 billion by 2018 during its merger with Alcatel-Lucent, largely by aggressively tracking and improving operational metrics.

Focusing on the "drum" process - the bottleneck that dictates the system's pace - can significantly boost overall performance. Cross-training employees to step in at bottleneck stations during peak demand periods is another effective strategy. Additionally, setting up rapid escalation protocols ensures that quality defects or throughput drops are addressed immediately when flagged on dashboards. These steps are critical to achieving the operational improvements factored into your initial valuation.
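One way to make escalation automatic is a control-chart-style rule: flag any day whose output falls below the post-close baseline mean minus three standard deviations. The three-sigma cutoff is a common convention borrowed from statistical process control, not a requirement. The baseline shown is a shortened, hypothetical stand-in for the 30-day measurements described above.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Lower and upper limits at mean +/- k sample standard deviations."""
    m, s = mean(baseline), stdev(baseline)
    return m - k * s, m + k * s


def needs_escalation(daily_output, baseline, k=3.0):
    """Days whose output falls below the lower control limit."""
    lower, _ = control_limits(baseline, k)
    return [day for day, units in daily_output.items() if units < lower]


# Hypothetical baseline (shortened for brevity) and one week of new readings
baseline = [1000, 1010, 990, 1005, 995, 1000, 1015, 985]
this_week = {"Mon": 1002, "Tue": 975, "Wed": 948, "Thu": 1011}
print(control_limits(baseline))                # (970.0, 1030.0)
print(needs_escalation(this_week, baseline))   # ['Wed']
```

Tuesday's dip stays within normal variation, while Wednesday's drop is large enough to trigger the escalation protocol.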

Conclusion

Throughput metrics shift M&A due diligence from a simple document review to a detailed, data-driven operational analysis. By examining cycle times, throughput rates, and work-in-process (WIP) levels, you uncover hard evidence of how efficiently a target company truly operates - beyond the polished narratives found in a seller's pitch deck. This approach highlights the operational realities that often remain hidden beneath the surface.

And the results speak for themselves. For instance, in September 2024, a global manufacturing company used outside-in diligence combined with external data scraping to assess a potential acquisition. The analysis revealed cost synergies more than double the initial estimate. This kind of insight comes from operational data that pinpoints bottlenecks, waste, and unused capacity - areas that can significantly impact valuation and integration strategies.

AI-powered platforms have made this type of analysis faster and more accessible. With 90% of the world’s data generated in just the past few years, buyers now have access to real-time data streams from IoT devices, transactional logs, and digital production systems - resources that were unavailable a decade ago. Yet, despite this abundance of data, only 8% of businesses currently adopt advanced analytics practices. For those who do, the competitive advantage is undeniable.

For smaller deals, such as those in the Main Street or Lower-Middle-Market segments, tools like Clearly Acquired simplify the process. These platforms integrate AI-assisted financial analysis, secure data rooms, and deal management features to help buyers spot operational risks early. This early detection allows for timely valuation adjustments or even reconsideration of an acquisition - before any capital is at stake.

Whether you're making your first acquisition or expanding an existing portfolio, throughput metrics provide the operational clarity needed to separate successful deals from costly missteps. The real challenge lies in knowing what to measure, where to focus, and how to turn those insights into actionable strategies for valuation and seamless post-close integration.

FAQs

How do throughput metrics impact M&A valuation and integration planning?

Throughput metrics - essentially a measure of how efficiently a business produces goods or delivers services - are crucial for both assessing a company’s value and planning the integration process after an acquisition. During due diligence, these metrics provide insight into a target company’s ability to scale operations, maximize asset use, and identify areas for potential cost reduction. For example, high throughput and effective capacity utilization can support a higher valuation, while inefficiencies or production bottlenecks might highlight risks that should influence the deal’s structure.

Once the acquisition moves into the integration phase, throughput metrics become a roadmap for improving processes. They help pinpoint bottlenecks, eliminate redundancies, and address inefficiencies. This allows acquirers to make informed decisions about where to invest - whether that’s in automation, reallocating labor, or upgrading systems. By tying these operational insights to financial goals, throughput metrics help the combined business hit synergy targets, such as achieving 10–15% cost savings within 18 months, all while ensuring operations continue to run smoothly.

Why is it risky to overlook throughput metrics during M&A due diligence?

Overlooking throughput metrics during M&A due diligence can leave buyers vulnerable to unseen operational inefficiencies that could chip away at the value of the deal. If you don’t examine how smoothly products or services flow through the target company’s operations, you might miss critical issues like bottlenecks, delays, or unnecessary costs. These kinds of problems often translate into missed revenue goals, slimmer profit margins, and unplanned expenses after the deal closes.

Neglecting throughput also complicates the process of evaluating scalability and forecasting potential synergies - both of which are essential for realizing the deal’s full value. Buyers could end up overestimating the target’s operational capacity or underestimating the effort and expense required for integration. The result? Outcomes that fall short of expectations. By incorporating a thorough throughput analysis early in the diligence process, buyers can pinpoint operational constraints, sidestep costly surprises, and set the stage for a more successful transaction.

How do digital tools improve the analysis of throughput metrics during M&A due diligence?

Digital tools have revolutionized the way throughput metrics are analyzed, making the process faster and more accurate. By converting raw data into practical insights, tools like process-mapping software and AI-driven analytics help pinpoint operational issues such as bottlenecks, idle times, and capacity limits. They can even model potential improvements - like adjusting staffing levels or upgrading equipment - to identify the most cost-effective solutions.

M&A-specific platforms, such as Clearly Acquired, take this functionality to another level. These platforms incorporate AI-powered tools that automatically process operational data, deliver detailed throughput reports, and recommend efficiency enhancements. This streamlined approach helps buyers evaluate operational risks with greater precision, simplify due diligence, and make well-informed decisions to boost performance after the acquisition.
